Can you give us an overview of your work?

Are we heading towards a society where we no longer have privacy?

What do you think about South Korea's approach to the crisis?

How can we make sure the GDPR stays in place?

Do you think the data gathering protocols are being sufficiently supervised and regulated?

Should governments regulate trading during global events such as the current pandemic?

How can we ensure algorithms remain ethical in the fight against pandemics?

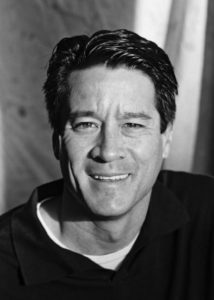

Michael Kearns is a professor and National Center Chair at the Computer and Information Science Department at the University of Pennsylvania. He's also the Founding Director of the Warren Center for Network and Data Sciences and Founder and former Director of the Penn program in Networked and Social Systems Engineering.

His research interests include topics in machine learning, algorithmic game theory, social networks, computational finance and artificial intelligence.

He is the co-author of The Ethical Algorithm, which features a set of principled solutions based on the emerging and exciting science of socially aware algorithm design.

Can you give us an overview of your work?

I have broad interests in machine learning, algorithmic game theory, quantitative finance and related areas. A particular recent focus is on designing “ethical algorithms” that embed social norms like fairness and privacy. I have a recent book on the topic with my colleague Aaron Roth.

Are we heading towards a society where we neither have nor care about privacy?

I don’t think so; in fact, I think concern over privacy is generally on the rise. The problem is that we have surrendered so much of it already, and that’s hard to reverse.

But there are promising technical approaches, including differential privacy, which has started to be deployed by tech companies and will be used to provide privacy protections for the upcoming 2020 U.S. census.

And if we chose to do so, the vast majority of machine learning and data analysis could be conducted in a privacy-preserving fashion.

Countries such as South Korea are relying heavily on citizens' private data to contain the spread of the virus. Mobile network operators are sharing information about citizens' social activity and movements with the government so that it can decide whether to confine them and their social circles. What do you think about this approach?

I can certainly understand the motivation for this in light of the magnitude and stakes of the current crisis.

There also seem to be efforts to use such data not for “enforcement” but to track movements and whether social distancing is working and where. To the extent that these analyses provide valuable signals and encouragement to the public, I think that’s a good thing.

The concern over such emergency uses of private data is what is sometimes called “mission creep”: you start with one specific intention, but as the crisis passes you find other uses of the data that are less critical and more intrusive. This of course happened infamously in the U.S. in the aftermath of 9/11.

Europe's GDPR states that 'people’s data is their own and requires anyone seeking to process it to obtain their consent'. Some countries are building in exceptions to override it in circumstances such as the current outbreak. How can we make sure this doesn't undermine our right to privacy?

The aforementioned mission creep would be my concern here: if regulations and laws are going to be relaxed for the current crisis, the exact nature and duration of those exceptions should be spelled out.

According to an article in Horizon, the EU Research and Innovation magazine, "unprecedented data sharing has led to faster-than-ever outbreak research". Do you think the data gathering protocols are being sufficiently supervised and regulated?

I don’t have any insider insight on this topic, but given the urgency of the coronavirus crisis, it would not surprise me if there’s not a great deal of supervision of anything right now, including data gathering.

We have seen people using inside information about the outbreak for personal profit, for example by selling stock holdings. Could trading on big data during a crisis like the current one be used for unethical profit, and should it be regulated? How?

People sell assets all the time for all kinds of reasons, so I think it’s important not to speculate before the details are known.

Because our understanding of the coronavirus crisis has been and remains so uncertain, I suspect it’s going to be hard to build a strong insider trading case based on whatever the guesses were on a particular date. Obviously there will always be people trying to profit from the market volatility created by global events.

But at least in the U.S. the laws governing insider trading are pretty clear and well-tested, so I’m not sure any specific new piece of regulation is called for.

How important will AI become in the fight against pandemics, and how can we ensure these algorithms remain ethical and socially aware?

I think machine learning and statistical modelling clearly play an important role in pandemics, and indeed many in that research community are actively trying to help.

It’s important that these models be as data-driven and specific to the current crisis as possible, and informed by the underlying biology.

As per my earlier remarks, it can be hard to focus on things like privacy when one is confronted by an emergency. But as algorithmic tools to help us do so become more mature and widespread, hopefully this will be ingrained into the process without researchers needing to deliberately attend to it.