In public policy, the term oversight is used at different levels and can imply institutional transparency, public accountability, or agency over outcomes. Current and proposed regulation does little to pin down these diffuse meanings. For the purposes of this policy brief, we propose the following definition: human oversight refers mainly to the agency that a human operator or supervisor of an (algorithm-based) system can exercise to mitigate any harm or malfunction caused by the system.

Regulation, as it stands, reflects a superficial understanding of human-machine interaction. To effectively minimise the harms of bias and discrimination in decision-making, whether they stem from humans or algorithms, policymakers should therefore first understand the risks and complexities behind the use of ADMS and how human oversight can play a meaningful role.

This policy brief seeks to inform readers about the complexities of human oversight in its definition, regulation and practice. There is considerable debate on how human-algorithm interaction should be regulated and whether human supervision, as currently required, is enough to mitigate algorithmic harms. This brief contributes to that debate by examining both regulation and research on human-computer interaction, in order to propose recommendations that can help create meaningful human involvement.

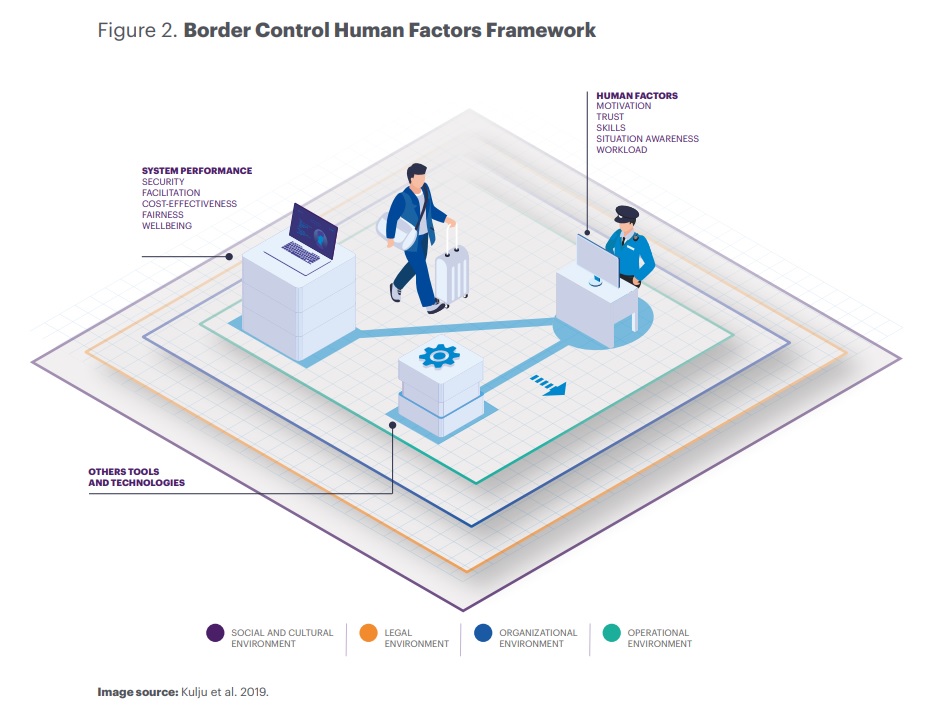

To understand the complex context of decision-making, academics highlight the importance of considering the many layers that surround the use of automated decision-making tools. Understanding this complexity requires studying how humans behave and interact with machines and, moreover, acknowledging the organisational, legal and sociocultural environment.

Within this context, there are human factors to take into account, such as the workload of the human operator and their motivation, confidence and trust in the automated tool. On the other hand, the performance of the system itself, including its transparency and its effectiveness as a tool, should also be considered (Ananny and Crawford 2018; Kemper and Kolkman 2019; Zhang et al. 2020; Lee and See 2004).

For regulatory purposes, oversight is meaningful when operators exercise their agency while being aware of the system’s (and their own) biases and limitations. This means human operators are able to prevent harms if they can recognise when an algorithm errs, understand why it has made a decision and account for the potential biases of the system. Therefore, in theory, for human oversight to be effective, the system design should also consider the limitations and biases of human operators.